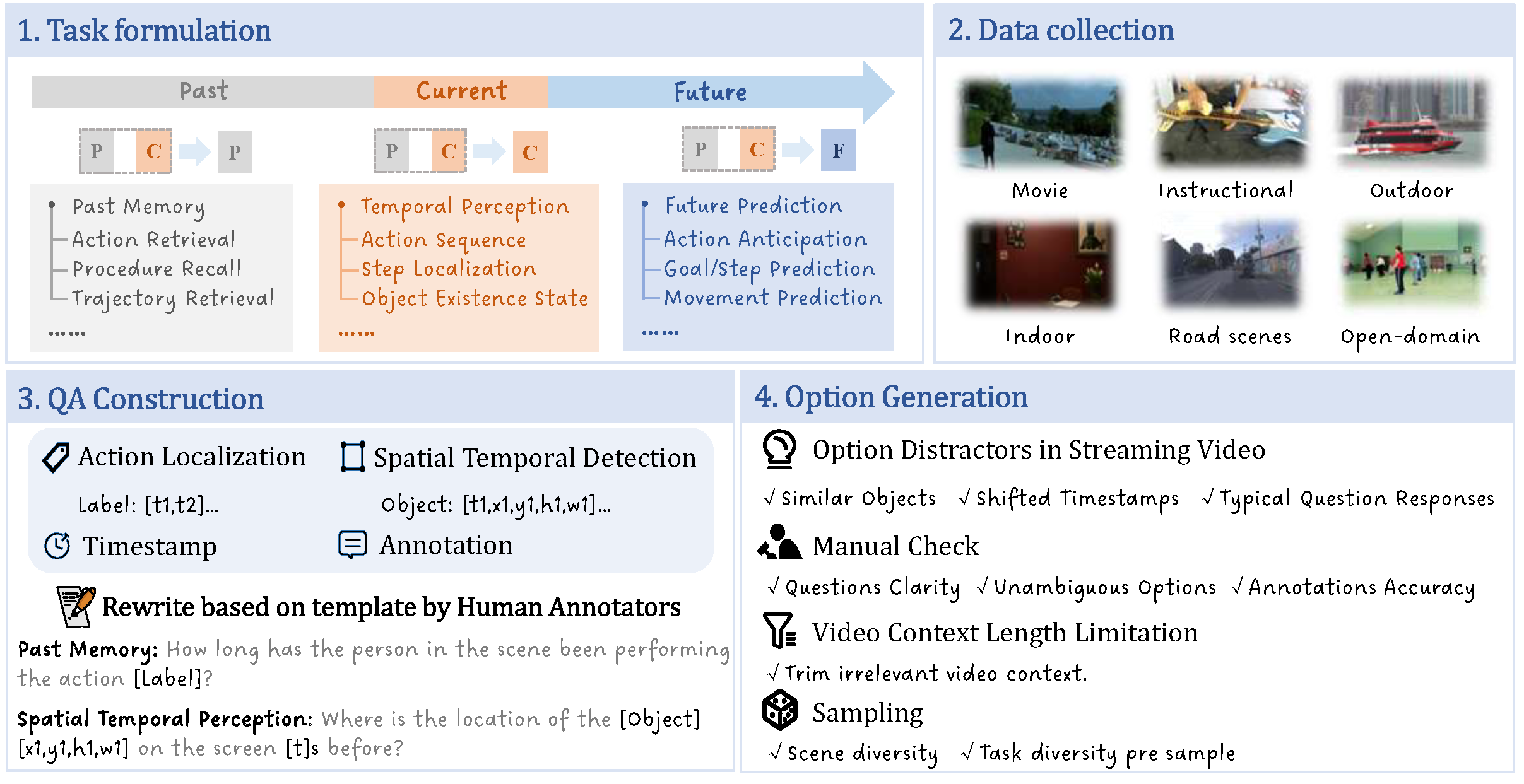

FP:Future Prediction AA: Action Anticipation GSP: Goal/Step Prediction MP: Movement Prediction

THV: Temporal Hallucination Verification AP: Action Persistence SV: Step Verification OV: Object Presence

PM: Past Memory AR: Action Retrieval PR: Procedure Recall TR: Trajectory Retrieval

SP: Spatio Perception AL: Action Location OP: Object Position

STP: Spatio-Temporal Perception AT: Action Trajectory OT: Object Trajectory

TP: Temporal Perception AS: Action Sequence SL: Step Localization OES: Object Existence State

By default, this leaderboard is sorted by overall Accuracy scores. To view other sorted results, please click on the corresponding cell.

| # | Task Name Subset Name |

Size | AVG | FP | THV | PM | SP | STP | TP | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AA | GSP | MP | AP | SV | OP | AR | PR | TR | AL | OP | AT | OT | AS | SL | OES | ||||

| Gemini-1.5-Flash

|

- | 50.7 | 71.4 | 53.6 | 21.9 | 56.5 | 60.8 | 40.6 | 36.7 | 47.9 | 62.5 | 32.3 | 37.5 | 87.0 | 50.0 | 83.3 | 22.3 | 46.9 | |

| InternVL2

Shanghai AI Lab |

7B | 48.7 | 52.6 | 60.2 | 27.6 | 57.5 | 52.0 | 58.5 | 38.8 | 67.1 | 58.3 | 38.1 | 31.3 | 87.4 | 37.0 | 75.4 | 31.4 | 5.9 | |

| InternVL2

Shanghai AI Lab |

4B | 44.1 | 57.7 | 57.0 | 14.4 | 59.2 | 49.4 | 60.0 | 30.3 | 61.8 | 46.3 | 30.9 | 20.1 | 83.0 | 32.3 | 70.7 | 29.4 | 3.4 | |

|

LLaMA-VID

CUHK |

7B | 41.9 | 43.6 | 50.9 | 19.6 | 64.0 | 47.5 | 46.8 | 29.4 | 48.9 | 51.2 | 31.9 | 11.2 | 75.7 | 24.8 | 59.1 | 26.0 | 40.0 | |

|

LLaVA-OneVision

Bytedance |

7B | 49.5 | 68.0 | 62.7 | 35.9 | 58.4 | 50.3 | 46.5 | 29.4 | 60.7 | 58.0 | 43.1 | 14.2 | 86.5 | 49.7 | 70.7 | 28.1 | 30.2 | |

| LongVA

LMMs-Lab |

7B | 43.6 | 64.1 | 56.5 | 29.5 | 54.9 | 51.9 | 34.8 | 35.3 | 55.6 | 57.7 | 31.6 | 3.4 | 67.4 | 44.7 | 80.0 | 26.7 | 4.0 | |

| MiniCPM-V 2.6

OpenBMB |

7B | 39.1 | 33.3 | 35.9 | 15.0 | 59.2 | 50.8 | 55.1 | 25.0 | 37.4 | 41.7 | 26.6 | 11.8 | 98.3 | 36.3 | 66.1 | 26.4 | 6.2 | |

| Qwen2-VL

Alibaba |

7B | 49.7 | 60.3 | 66.1 | 22.1 | 54.9 | 51.5 | 51.1 | 37.8 | 64.4 | 69.3 | 35.3 | 28.5 | 97.0 | 49.4 | 65.1 | 30.8 | 11.7 | |

|

LITA

NVIDIA |

7B | 20.4 | 19.2 | 24.5 | 19.9 | 40.8 | 48.9 | 24.9 | 3.1 | 27.3 | 6.4 | 6.9 | 14.6 | 35.2 | 23.9 | 27.4 | 0.5 | 3.4 | |

| TimeChat

PKU |

7B | 12.8 | 7.7 | 15.3 | 18.7 | 20.6 | 15.7 | 11.7 | 9.1 | 14.7 | 9.8 | 7.5 | 19.5 | 13.9 | 10.3 | 9.3 | 10.1 | 10.8 | |

| VTimeLLM

THU |

7B | 33.1 | 37.2 | 23.4 | 15.0 | 64.8 | 43.8 | 53.2 | 25.9 | 38.8 | 32.5 | 25.9 | 20.4 | 40.9 | 6.8 | 48.4 | 43.5 | 8.6 | |

| VideoChat-Online

Ours |

4B | 53.9 | 56.4 | 63.0 | 15.6 | 57.1 | 57.9 | 61.9 | 39.1 | 54.2 | 73.9 | 41.3 | 29.7 | 92.2 | 53.1 | 69.8 | 27.3 | 69.9 | |

| ⭐ VideoLLM-Online

NUS |

7B | 9.6 | 0.0 | 1.8 | 20.9 | 5.2 | 5.9 | 32.6 | 0.0 | 2.3 | 26.7 | 0.6 | 26.6 | 0.9 | 19.9 | 0.9 | 1.7 | 8.3 | |

| ⭐ MovieChat

ZJU |

7B | 30.9 | 23.1 | 27.5 | 23.6 | 58.4 | 43.9 | 40.3 | 25.6 | 31.1 | 23.9 | 26.9 | 39.6 | 24.4 | 28.9 | 29.3 | 25.5 | 21.9 | |

| ⭐ Flash-Vstream

THU |

7B | 31.2 | 26.9 | 37.6 | 23.9 | 60.1 | 41.9 | 40.0 | 23.4 | 35.3 | 26.1 | 24.7 | 28.8 | 27.0 | 21.4 | 29.8 | 25.6 | 26.8 | |

| ⭐ VideoChat-Online

Ours |

4B | 54.9 | 64.1 | 59.7 | 16.6 | 63.1 | 58.3 | 62.8 | 42.2 | 54.4 | 70.6 | 54.1 | 24.8 | 88.7 | 48.5 | 73.0 | 25.9 | 71.7 | |

⭐: indicates the input is streaming video -: indicates "unknown" for closed-source models